Monkeys have vocal tools, but not brains, to talk like humans

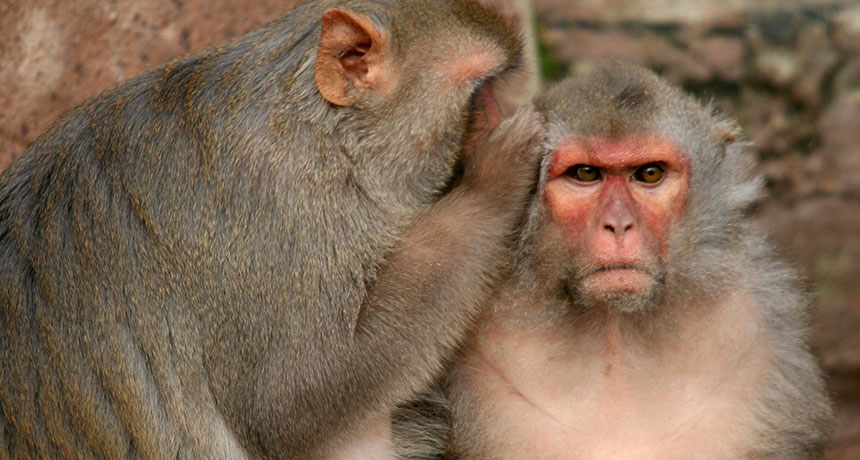

Macaque monkeys would be quite talkative if only their brains cooperated with their airways, a new study suggests.

These primates possess the vocal equipment to speak much as people do, say evolutionary biologist and cognitive scientist W. Tecumseh Fitch of the University of Vienna and colleagues. But macaques lack brains capable of transforming that vocal potential into human talk. As a result, the monkeys communicate with grunts, coos and other similar sounds, the scientists conclude December 9 in Science Advances.

“Macaques have a speech-ready vocal tract but lack a speech-ready brain to control it,” Fitch says.

His team took X-ray videos of an adult macaque’s vocal tract while the animal cooed, grunted, made threatening sounds, smacked its lips, yawned and ate various foods. Measurements of the tract’s shifting shape during these activities allowed the researchers to estimate which speech sounds the monkey could potentially produce.

Monkeys, and presumably apes, have mouths, vocal cords and other vocal tract elements capable of articulating at least five vowel sounds, the researchers say. These are the vowels heard in the words bit, bet, bat, but and bought.

Consonant sounds within monkeys’ reach include those corresponding to the letters p, b, k, g, h, m and w, the scientists add.

An animal that can voice those vowels and consonants is capable of making understandable statements in English and many other languages, they conclude.

The new findings expand monkeys’ gab potential beyond that described in a pioneering 1969 study led by anthropologist and cognitive scientist Philip Lieberman, Fitch claims. Lieberman, now at Brown University in Providence, R.I., devised a computer model of a macaque’s speech potential based on measurements of a monkey cadaver’s vocal tract.

Lieberman regards the new study as a replication of his 1969 report. Both investigations find that monkeys can emit a partial range of vowel sounds, Lieberman says. Each paper also determines that two especially distinctive vowel sounds, found in the words beet and boot, lie outside macaques’ vocal realm.

Hearing those sounds is another issue. Acoustic properties of the vowel sounds monkeys can produce make them relatively difficult for people to identify while listening to someone talk, Lieberman emphasizes. “If monkeys had humanlike brains, they could talk, but their speech would sound indistinct,” he says.

Fitch disagrees. By studying a living monkey’s vocal tract in action, the new study finds that these animals can make a broader range of sounds related to each of the five key vowels than reported by Lieberman, he argues. A talking monkey “would be distinct enough to understand, no worse than a foreign accent,” Fitch says. A computer-generated version of the spoken phrase “Will you marry me?” — based on newly calculated properties of the macaque’s vocal tract — is easily grasped by a listener, although less clear than the same phrase spoken by a human female, Fitch says.

Fitch and colleagues add to a growing body of evidence that monkeys have speech-ready vocal tracts, says biological anthropologist Adriano Lameira of Durham University in England. It’s too soon, though, to say that monkeys’ brains aren’t at least partially speech-ready, he argues. Recent studies of apes, some conducted by Lameira, find that these close relatives of humans exert considerable control over their vocal tracts, allowing them to learn novel calls containing sounds similar to vowels and consonants. Neural control of various parts of the vocal tract is needed to master these sounds, Lameira says. Little is known about whether monkeys can do the same.

An aptitude for incorporating new sounds into vocal communication possibly originated in ancient primates, laying the evolutionary groundwork for human speech, Lameira proposes.